Knowledge Integration: RAG vs. Fine-Tuning - Which Strategy Is Right For You?

By DevDash Labs

Jan 7, 2025

The Challenge of Making AI Smarter

Integrating knowledge into artificial intelligence (AI) systems is no longer a supplementary consideration but a core strategic decision. For organizations seeking tangible value from AI initiatives, the choice of knowledge integration strategy shapes the performance, adaptability, and ultimately the business impact of their AI applications. Two prominent methodologies, Retrieval Augmented Generation (RAG) and Fine-Tuning, take contrasting approaches to this challenge. This article provides a framework to guide your organization in choosing between them.

RAG vs. Fine-Tuning: Core Differences

The core distinction between Retrieval Augmented Generation (RAG) and Fine-Tuning lies in how knowledge is integrated into the AI model. Examining these mechanisms clarifies their respective strengths and limitations:

Retrieval Augmented Generation (RAG): RAG leverages external, often dynamic, knowledge bases to augment the capabilities of Large Language Models (LLMs). This involves a real-time retrieval process wherein the AI system accesses and incorporates relevant information from these external sources in response to specific queries. This process is particularly beneficial in environments where knowledge is constantly evolving. RAG is distinguished by its agile, lightweight nature, allowing for flexible adaptation and immediate updates to the knowledge sources.

Fine-Tuning: In contrast, fine-tuning integrates knowledge directly into the model's weights. The method modifies the model's internal parameters by training on a carefully curated dataset, which instills specific knowledge or capabilities. While it can offer significant gains in precision, fine-tuning is more resource-intensive than RAG, demanding more computational power and training time. This makes it a suitable approach for applications that prioritize accuracy and consistency.

Strategic Advantages: When to Choose Which

Each methodology offers strategic advantages that align with different business objectives and operational requirements. Understanding these advantages is critical for making a well-informed decision:

RAG: Leveraging Flexibility and Adaptability

Dynamic Knowledge Updates and Transparency: One of the key strengths of RAG is its ability to accommodate dynamic knowledge environments. Knowledge sources can be updated in real time, so AI applications can draw on the most recent information available in those sources. Because each response can be traced back to the documents that were retrieved, the knowledge retrieval process also remains transparent.

Cost-Effective Deployment and Rapid Implementation: RAG offers a more streamlined approach to implementation, which results in shorter deployment timelines and reduced infrastructure costs. This makes it a favorable option for organizations seeking to quickly implement AI solutions with limited resources.

Real-Time Knowledge Integration and Query Responsiveness: The capacity for real-time integration of new information and rapid query responsiveness makes RAG highly suitable for tasks where up-to-date knowledge is essential. RAG excels in addressing dynamic environments by seamlessly incorporating new information into its responses on-demand.

Fine-Tuning: Achieving Precision and Control

Task-Specific Performance Optimization: Fine-tuning allows for a high degree of optimization for predefined tasks by tailoring the model to specific datasets and requirements. This results in enhanced performance, delivering more precise and accurate outputs.

Brand Voice Consistency and Customization: For organizations seeking to maintain a consistent brand voice, fine-tuning offers superior control over the model's tone, style, and overall output. This allows the AI to communicate in a brand-specific tone that reinforces brand identity.

Secure Data Handling and Compliance: Fine-tuning lets you keep your data in-house when the model is trained and hosted on your own infrastructure, making it a suitable choice when working with sensitive or proprietary information. This suits businesses that must adhere to strict security and privacy policies.

Ideal Use Cases: Where Each Approach Shines

The practical application of RAG and fine-tuning hinges on the specific needs and requirements of each use case. Matching methodologies to appropriate contexts is crucial for achieving optimal outcomes:

When to Utilize RAG:

Knowledge Bases and Documentation Platforms: RAG is highly suitable for applications involving extensive documentation or knowledge bases that require frequent updates. This is applicable for a variety of fields where constant updates and quick references are a must.

Real-Time Information Updates and Customer Support: For applications that demand up-to-the-minute information, such as customer support systems that need to address rapidly changing trends or pricing structures, RAG provides an excellent solution by integrating real-time data into its responses.

Research and Content Aggregation: When the task involves synthesizing data from diverse and evolving sources, RAG offers a dynamic approach to information aggregation and analysis.

When to Utilize Fine-Tuning:

Compliance and Regulatory Workflows: For industries with strict regulatory requirements, fine-tuning is valuable because it enhances control and precision in model outputs, which supports adherence to stringent standards.

Brand-Specific Customer Interactions: Organizations that prioritize a unique brand voice and tone will find fine-tuning particularly valuable in ensuring that AI-driven customer interactions consistently reflect their brand identity.

Private Data Processing Tasks: Fine-tuning provides an added layer of security when working with sensitive internal data. It makes it possible to keep your data within the confines of your own secure servers.

RAG vs. Fine-Tuning: A Peek Under the Hood

Understanding the technical architecture of RAG and fine-tuning is essential for successful implementation:

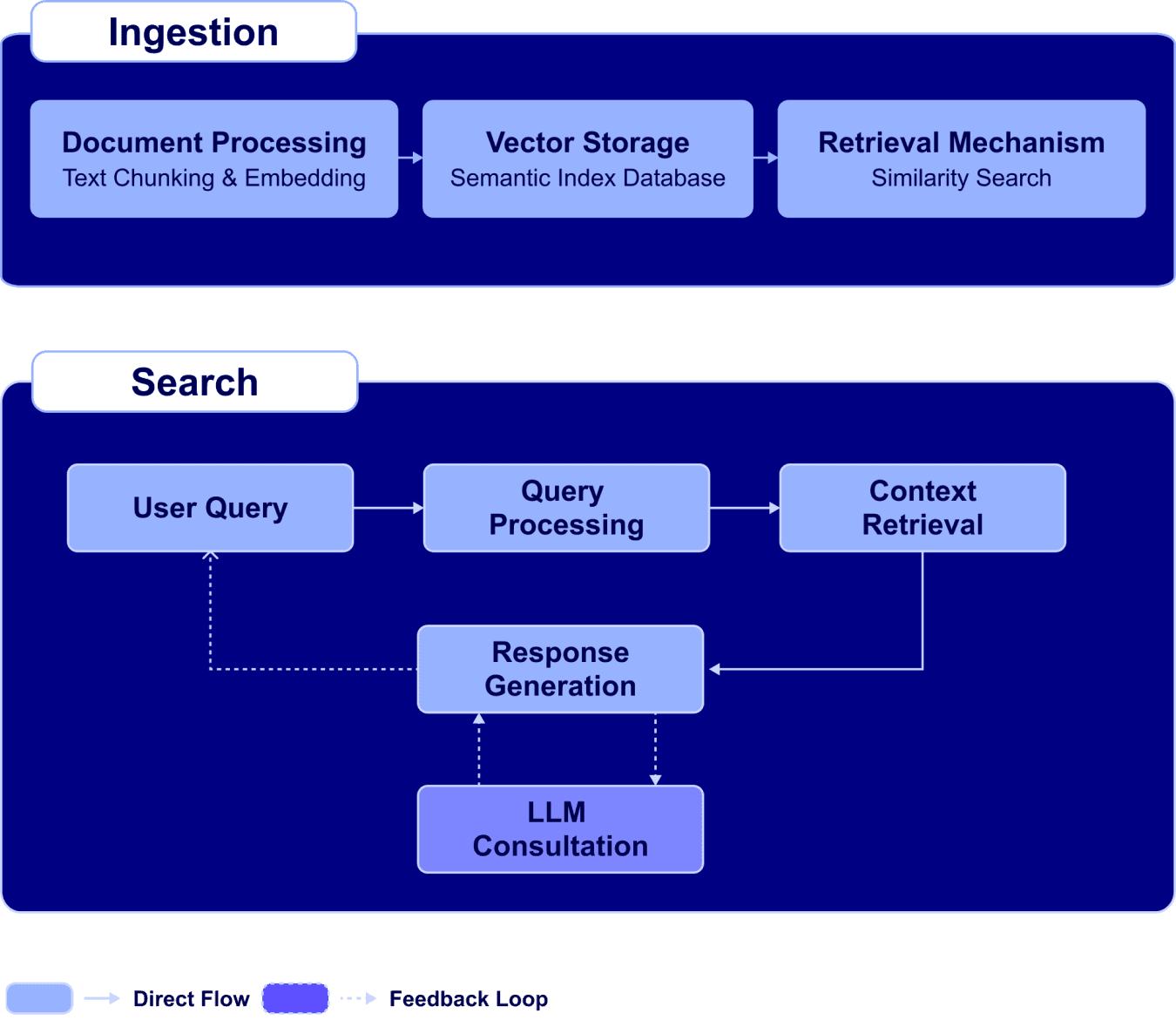

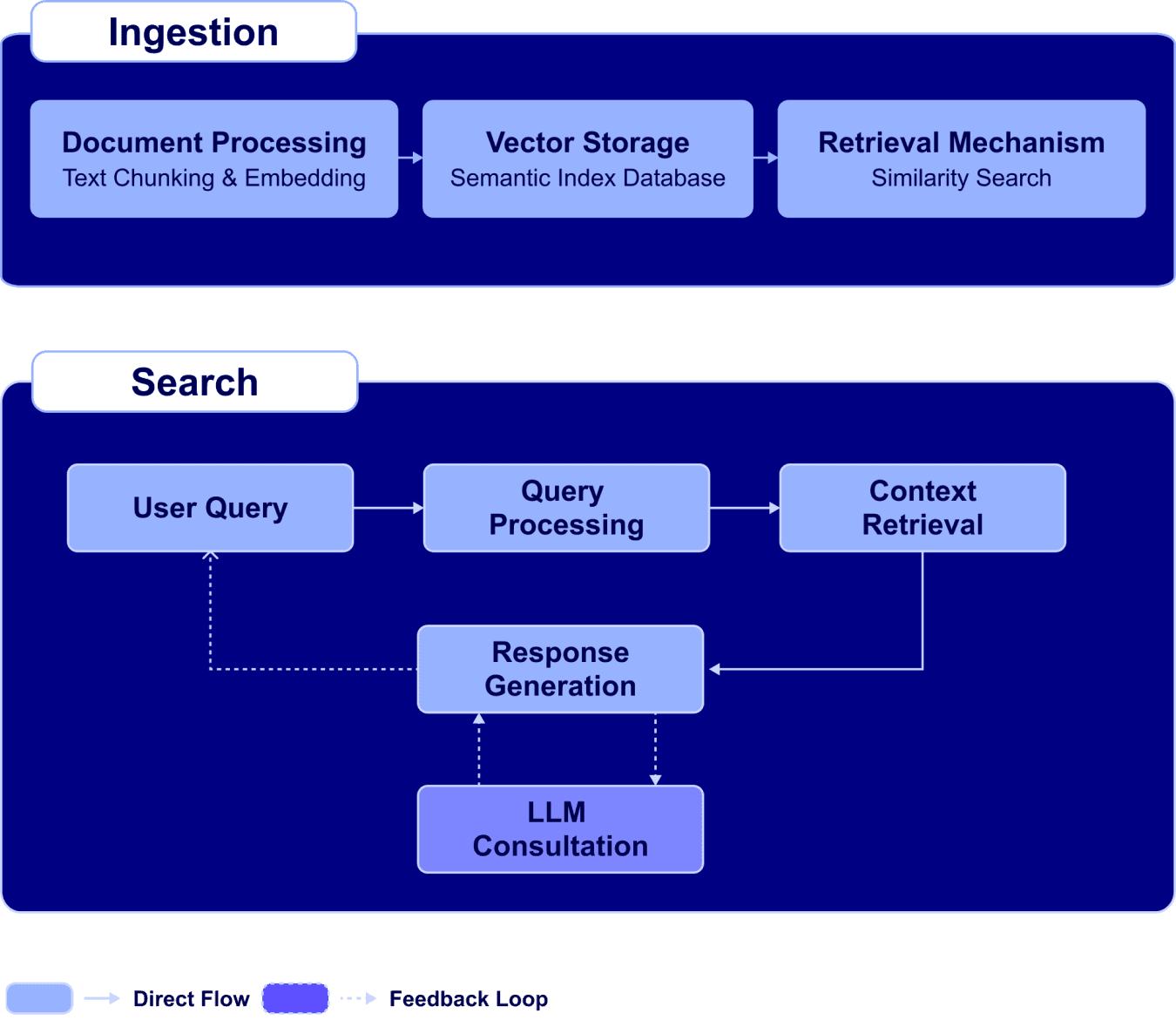

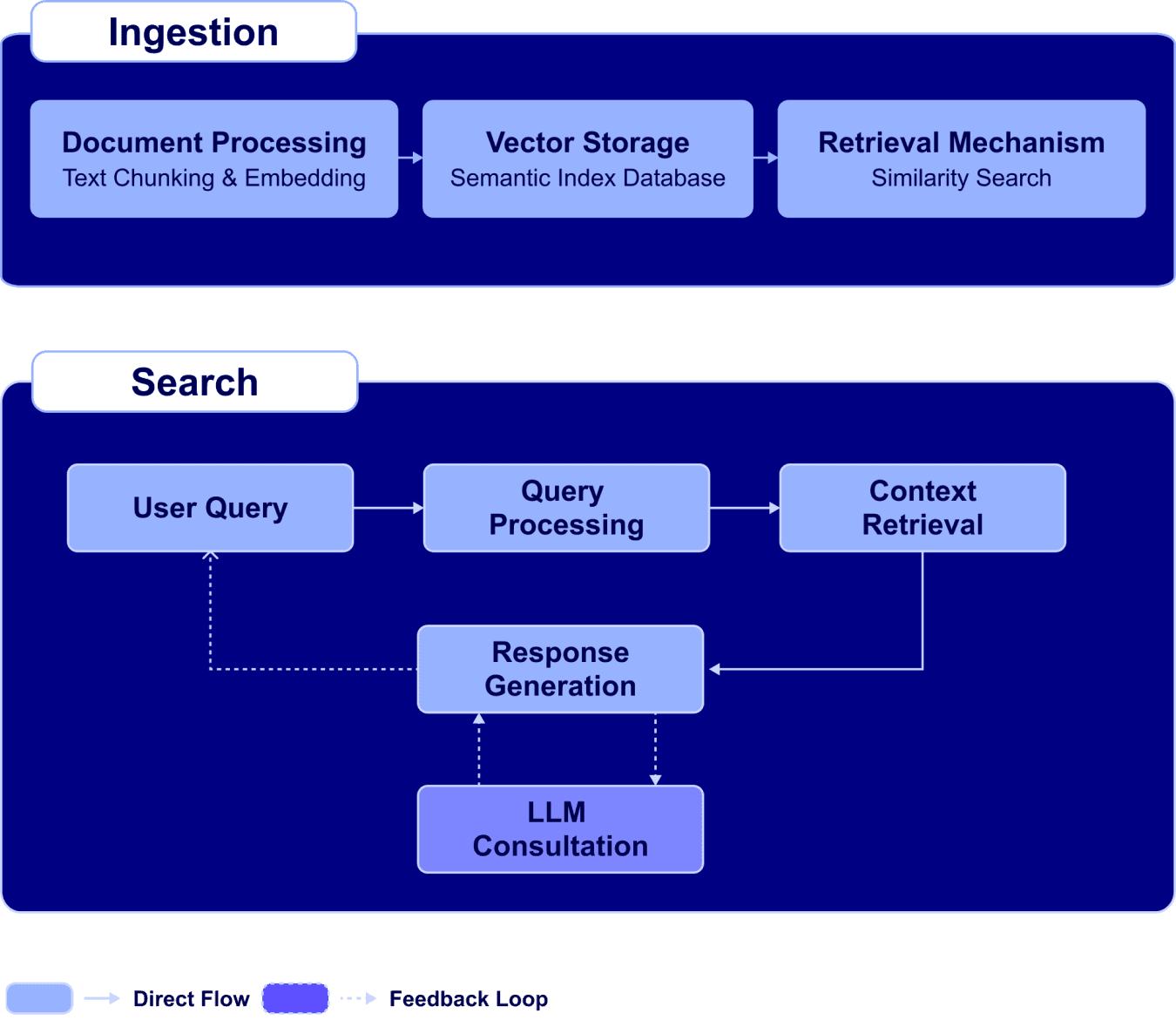

RAG Architecture:

Ingestion Phase: This involves processing documents to extract pertinent information, creating vector embeddings, and storing these embeddings within a semantic index database. This data will then be referenced by the system when processing a user query.

Search Phase: This phase begins with the user query, followed by query processing and context retrieval using similarity searches within the semantic database. The retrieved context is then passed to the LLM, which generates and refines the response.
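The two phases above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `embed` function is a toy bag-of-words stand-in for a real embedding model, `SemanticIndex` is an in-memory stand-in for a vector database, and `build_prompt` only assembles the text that would be sent to an LLM. All of these names and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticIndex:
    """In-memory stand-in for a vector database (hypothetical helper)."""

    def __init__(self):
        self.entries = []  # list of (document text, embedding) pairs

    def ingest(self, document):
        # Ingestion phase: embed the document and store the vector.
        self.entries.append((document, embed(document)))

    def search(self, query, k=1):
        # Search phase: embed the query and rank stored documents by similarity.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(query, context_docs):
    """Assemble the augmented prompt that would be sent to an LLM."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base for a support assistant.
index = SemanticIndex()
index.ingest("The Pro plan costs 40 dollars per month.")
index.ingest("Support is available on weekdays from 9 to 5.")
index.ingest("Refunds are processed within 14 days.")

query = "How much does the Pro plan cost?"
top = index.search(query, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

Adding a document to the knowledge base here is a single `ingest` call, with no change to the model itself.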

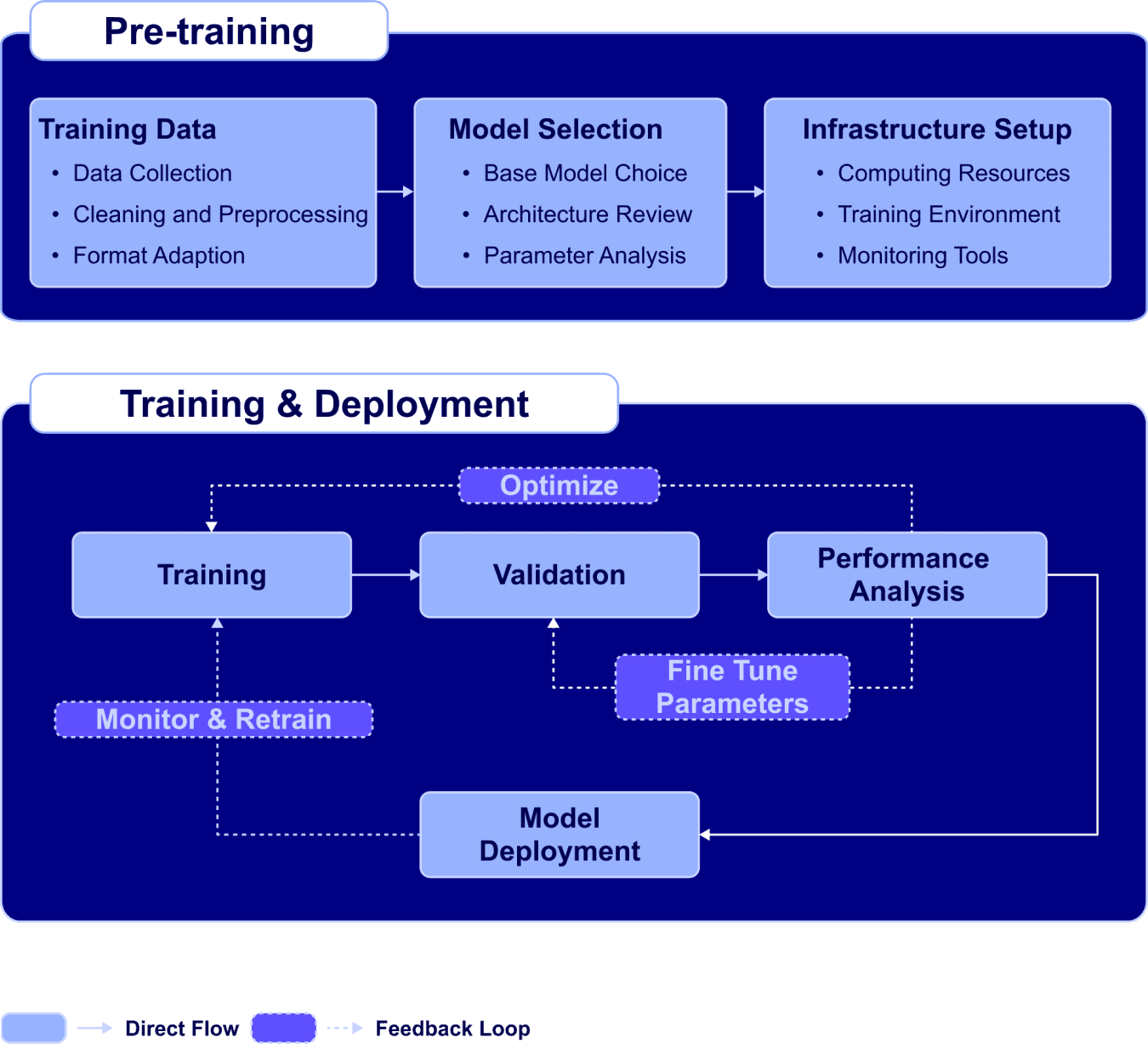

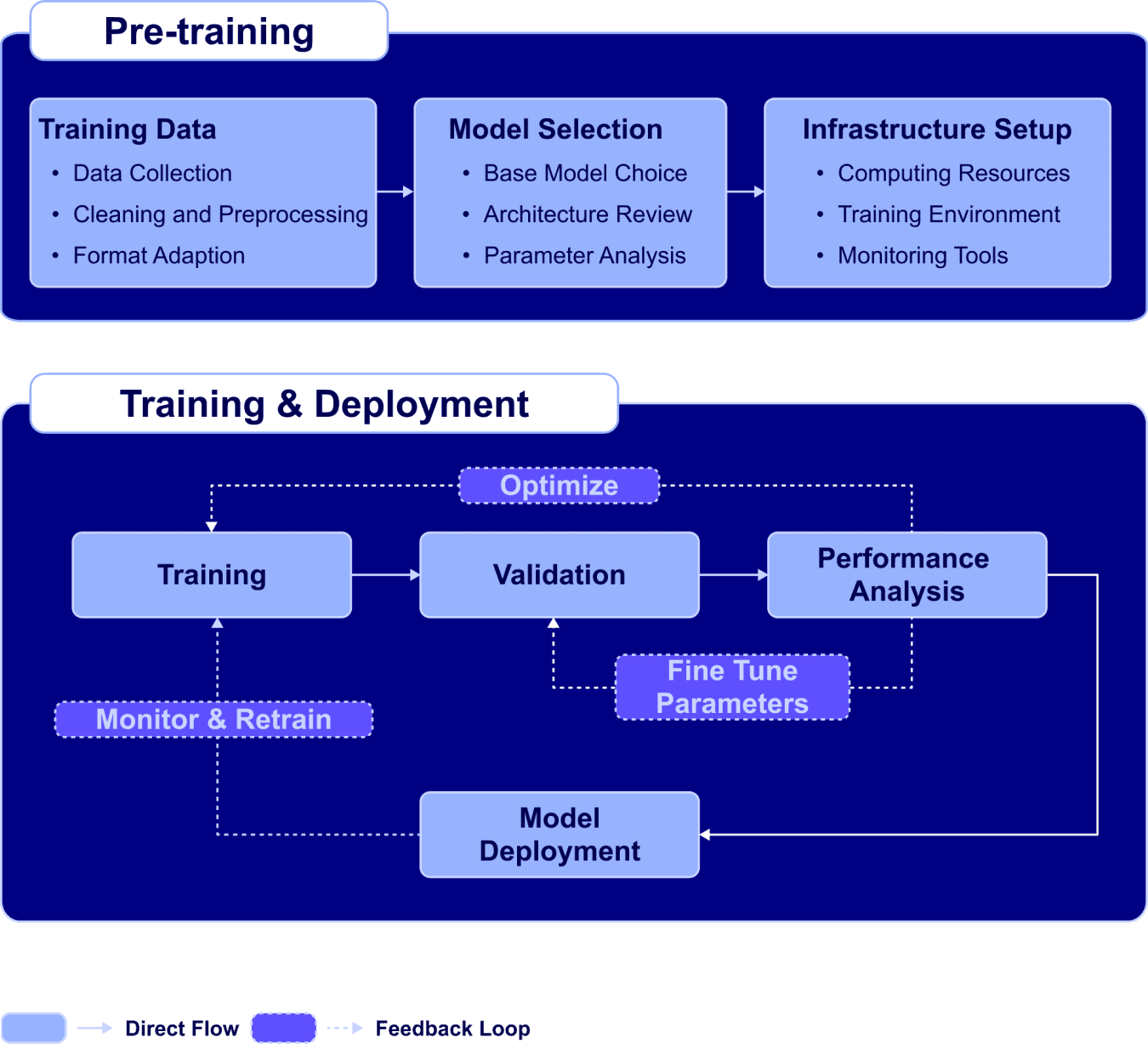

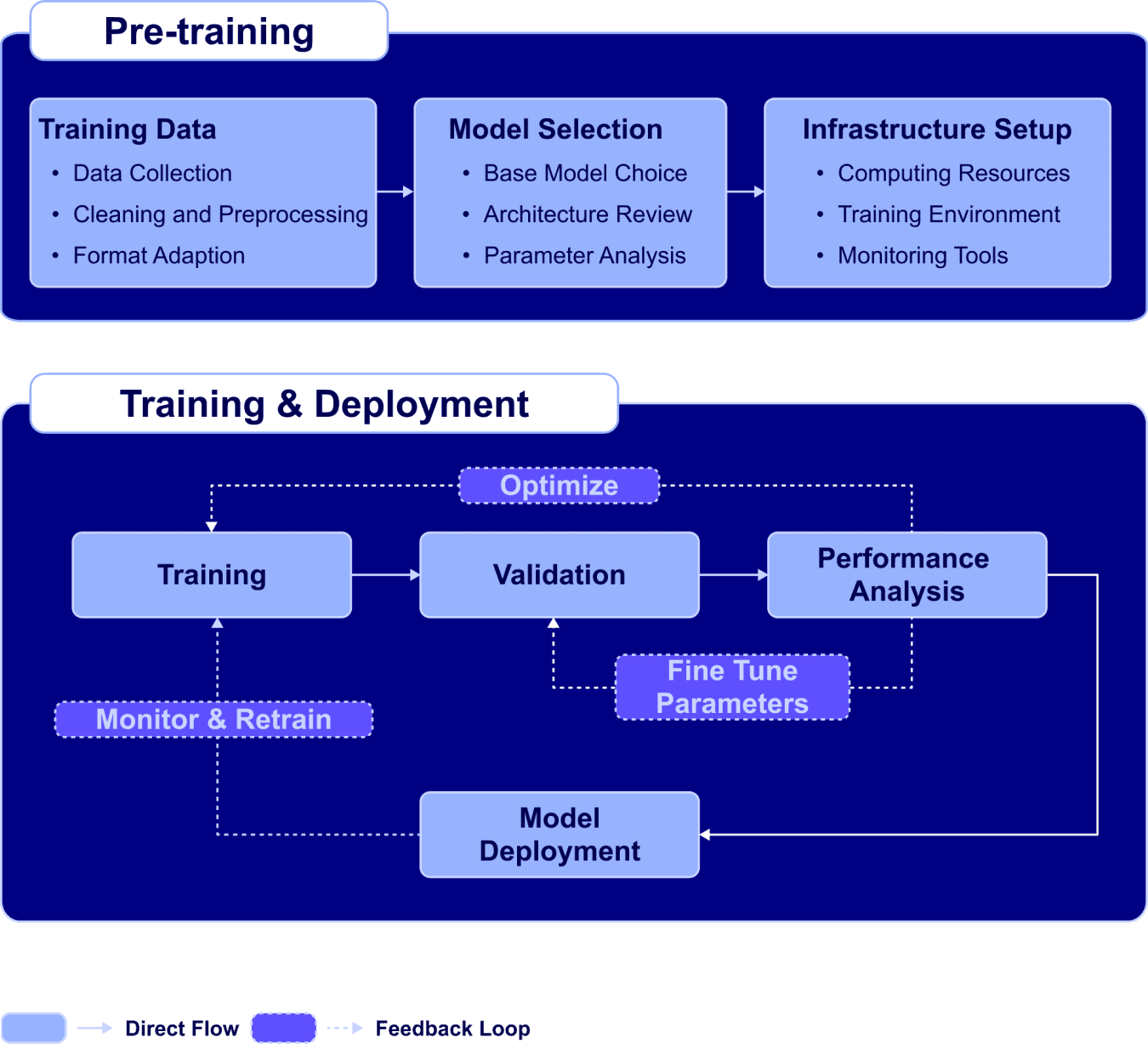

Fine-Tuning Architecture:

Pre-Training Phase: This phase, which precedes training, involves meticulous planning and preparation. Data collection, cleaning, and preprocessing are necessary for an efficient training process. Once the data is ready, a base model must be selected and analyzed for its parameters and architecture. Once the right model has been selected, the required computing and monitoring infrastructure must be set up.

Training and Deployment Phase: This phase covers training the model on the prepared dataset, validating its performance, optimizing specific parameters, and deploying the fine-tuned model into a production environment, followed by ongoing performance analysis.
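To make the train-then-validate loop concrete, here is a deliberately tiny sketch. It is not an LLM workflow: it assumes a two-parameter linear model as a stand-in for the base model, a synthetic dataset as a stand-in for the curated data, and plain gradient descent as the training procedure. The names `fine_tune`, `base_weights`, and the data are all hypothetical. The only point it illustrates is that fine-tuning continues training from existing parameters and is checked on held-out data.

```python
import random


def predict(weights, x):
    """Linear model: weights[0] is the bias, weights[1] is the slope."""
    return weights[0] + weights[1] * x


def mse(weights, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, train, lr=0.05, epochs=500):
    """Continue gradient descent from existing weights on a new dataset."""
    b, w = weights
    n = len(train)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to bias and slope.
        grad_b = sum(2 * (b + w * x - y) for x, y in train) / n
        grad_w = sum(2 * (b + w * x - y) * x for x, y in train) / n
        b -= lr * grad_b
        w -= lr * grad_w
    return [b, w]


# Hypothetical "base model": parameters learned earlier on general data.
base_weights = [0.0, 1.0]

# Hypothetical curated dataset for the target task (here y = 2x + 1).
dataset = [(i / 10, 2 * (i / 10) + 1) for i in range(-20, 21)]
random.Random(0).shuffle(dataset)

# Hold out a validation split before training.
split = int(0.8 * len(dataset))
train, validation = dataset[:split], dataset[split:]

tuned_weights = fine_tune(base_weights, train)

print("validation MSE before:", mse(base_weights, validation))
print("validation MSE after: ", mse(tuned_weights, validation))
```

Unlike the RAG case, incorporating new knowledge here means running `fine_tune` again on updated data, which is why fine-tuned models are refreshed through periodic retraining.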

Implementation Considerations:

The practical implementation of both RAG and fine-tuning requires careful assessment of resource, timeline, and technical infrastructure needs:

Infrastructure Cost: RAG tends to be lower in cost compared to the more resource-intensive fine-tuning.

Deployment Time: RAG is typically faster, taking 2-4 weeks, while fine-tuning can take 6-12 weeks.

Update Frequency: RAG allows for real-time updates, while fine-tuned models require periodic retraining.

Technical Stack: RAG needs a vector database and document pipeline, while fine-tuning requires a GPU/TPU cluster for training and model hosting.

DevDash Decision Framework: Which is Right for You?

The selection between RAG and fine-tuning should be informed by a clear understanding of the business environment and strategic objectives:

RAG: Optimal for agile, dynamic business environments where flexibility, speed, and cost efficiency are of the utmost importance. RAG is highly suited for teams with less experience in AI, where it's imperative to rapidly adapt and update strategies based on evolving data.

Fine-Tuning: Best suited for enterprises that require high levels of precision, consistency, and control over model output. This is a favorable option for organizations that can leverage experienced AI teams and for long-term, high-value applications where careful refinement and robust accuracy are of the utmost importance.
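The criteria above can be turned into a simple checklist. The sketch below is one hypothetical way to encode them, assuming each criterion is a yes/no answer with equal weight; the `ProjectProfile` fields and the `recommend` function are illustrative names, and a real decision would weigh these factors with more nuance.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    """Yes/no answers to the criteria named in the framework (hypothetical fields)."""
    knowledge_changes_often: bool
    needs_fast_deployment: bool
    limited_budget: bool
    experienced_ai_team: bool
    needs_high_precision: bool
    needs_consistent_output: bool
    long_term_high_value: bool


def recommend(profile):
    """Count the signals pointing to each approach and return the stronger one."""
    rag_signals = sum([
        profile.knowledge_changes_often,
        profile.needs_fast_deployment,
        profile.limited_budget,
        not profile.experienced_ai_team,
    ])
    fine_tuning_signals = sum([
        profile.experienced_ai_team,
        profile.needs_high_precision,
        profile.needs_consistent_output,
        profile.long_term_high_value,
    ])
    if rag_signals > fine_tuning_signals:
        return "RAG"
    if fine_tuning_signals > rag_signals:
        return "fine-tuning"
    return "no clear preference"


# Hypothetical example: a small support team with fast-changing pricing.
support_bot = ProjectProfile(
    knowledge_changes_often=True,
    needs_fast_deployment=True,
    limited_budget=True,
    experienced_ai_team=False,
    needs_high_precision=False,
    needs_consistent_output=False,
    long_term_high_value=False,
)

# Hypothetical example: a regulated enterprise with an in-house ML team.
compliance_assistant = ProjectProfile(
    knowledge_changes_often=False,
    needs_fast_deployment=False,
    limited_budget=False,
    experienced_ai_team=True,
    needs_high_precision=True,
    needs_consistent_output=True,
    long_term_high_value=True,
)

print(recommend(support_bot))
print(recommend(compliance_assistant))
```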

Conclusion: Making the Smart Choice

The integration of knowledge into AI systems is an essential component of leveraging AI’s full potential. This comprehensive analysis of RAG and fine-tuning provides a framework for organizations to make informed decisions that align with their strategic objectives, resources, and timelines. By thoughtfully assessing their specific needs and requirements, organizations can choose the methodology that will best serve their business goals, ensuring they harness the power of AI in a responsible and effective manner.
